A new algorithm called SMA-YOLO has been introduced to improve the detection of small objects in aerial imagery. It targets the challenges typical of remote sensing scenes: wide spatial coverage, cluttered backgrounds, and the need for accurate object classification.

In remote sensing, drones have become indispensable tools, particularly for traffic monitoring and environmental assessment, thanks to their operational flexibility and payload capacity. However, detecting objects, especially small ones that occupy only a few pixels, remains difficult. SMA-YOLO addresses this problem by drawing on recent advances in deep learning and attention mechanisms.

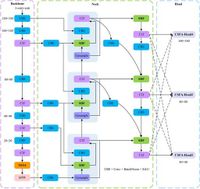

The algorithm introduces a Non-Semantic Sparse Attention (NSSA) mechanism within its backbone network, allowing it to efficiently extract crucial non-semantic features germane to various detection tasks. By eschewing reliance on semantic content, the NSSA mechanism enhances the algorithm’s sensitivity to small objects while keeping its parameter count manageable.
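The internals of NSSA are not described here, so the following is only a generic illustration of what "sparse" attention can mean, not the authors' mechanism. It is a minimal sketch of top-k sparse attention, in which each query attends to its `k` best-matching keys instead of all of them. The function name `topk_sparse_attention` and the top-k selection rule are assumptions made for illustration.

```python
import math

def topk_sparse_attention(queries, keys, values, k):
    """Single-head attention where each query attends only to its k highest-scoring keys.

    Generic top-k sparsification, assumed for illustration; not the NSSA design.
    """
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Scaled dot-product score against every key.
        scores = [sum(qi * ki for qi, ki in zip(q, key)) / math.sqrt(dim) for key in keys]
        # Keep the indices of the k largest scores; all other keys get zero weight.
        kept = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
        # Softmax over the kept scores, shifted by the max for numerical stability.
        top = max(scores[i] for i in kept)
        exps = {i: math.exp(scores[i] - top) for i in kept}
        total = sum(exps.values())
        # Weighted sum of the kept value vectors.
        out = [0.0] * len(values[0])
        for i, e in exps.items():
            weight = e / total
            for d, v in enumerate(values[i]):
                out[d] += weight * v
        outputs.append(out)
    return outputs
```

With `k` equal to the number of keys this reduces to ordinary dense attention. Smaller `k` limits how many positions contribute to each output, which is one common way sparse attention variants focus on a few positions.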

Building on this foundation, SMA-YOLO integrates the Bidirectional Multi-Branch Auxiliary Feature Pyramid Network (BIMA-FPN), which combines high-level semantic information with low-level spatial detail. The fused features cover a wide range of object scales, which is critical for effective recognition across varied scenes.
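To make the idea of bidirectional fusion concrete, here is a minimal sketch of a generic top-down plus bottom-up pass over a feature pyramid. It is not the BIMA-FPN architecture, whose multi-branch and auxiliary details are not given here. The sketch assumes single-channel feature maps stored as nested lists, each level half the resolution of the previous one, and uses plain addition where a real network would use learned convolutions. All function names are hypothetical.

```python
def upsample2x(fmap):
    """Nearest-neighbour upsampling: each cell becomes a 2x2 block."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out

def downsample2x(fmap):
    """2x2 average pooling with stride 2 (assumes even height and width)."""
    return [
        [(fmap[r][c] + fmap[r][c + 1] + fmap[r + 1][c] + fmap[r + 1][c + 1]) / 4.0
         for c in range(0, len(fmap[0]), 2)]
        for r in range(0, len(fmap), 2)
    ]

def add_maps(a, b):
    """Element-wise sum of two equally sized maps."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def bidirectional_fuse(levels):
    """levels: feature maps ordered fine -> coarse, each half the size of the previous."""
    # Top-down pass: push coarse, semantically rich maps into the finer levels.
    top_down = list(levels)
    for i in range(len(levels) - 2, -1, -1):
        top_down[i] = add_maps(levels[i], upsample2x(top_down[i + 1]))
    # Bottom-up pass: push fine spatial detail back into the coarser levels.
    fused = list(top_down)
    for i in range(1, len(levels)):
        fused[i] = add_maps(top_down[i], downsample2x(fused[i - 1]))
    return fused
```

After both passes, every level has received information from every other level, so a small object that is only visible at fine resolution can still benefit from context computed at coarse resolution.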

Additionally, the Channel-Space Feature Fusion Adaptive Head (CSFA-Head) complements these advancements by adeptly managing multi-scale features, ensuring compatibility and consistency across varying object sizes. This adaptive capability is paramount when operating within the complex scenarios typical of remote sensing tasks.

The algorithm was validated experimentally on the VisDrone2019 dataset, which comprises a diverse array of object classes in aerial imagery. SMA-YOLO improved mean Average Precision (mAP) by 13% compared to baseline models, indicating stronger detection in complex environments.
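For readers unfamiliar with the metric, mAP is the mean over object classes of each class's average precision (AP), the area under its precision-recall curve. The sketch below computes AP with all-point interpolation, one common convention; the exact evaluation protocol used in the study is not stated here. It assumes each detection has already been matched against ground truth (usually by an IoU threshold) and labelled as a true or false positive. The function names are hypothetical.

```python
def average_precision(scored_hits, num_ground_truth):
    """scored_hits: list of (confidence, is_true_positive) pairs for one class."""
    if num_ground_truth == 0:
        return 0.0
    # Rank detections from most to least confident.
    ranked = sorted(scored_hits, key=lambda d: d[0], reverse=True)
    precisions, recalls = [], []
    true_positives = 0
    for rank, (_, is_tp) in enumerate(ranked, start=1):
        true_positives += 1 if is_tp else 0
        precisions.append(true_positives / rank)
        recalls.append(true_positives / num_ground_truth)
    # Interpolate: precision at each point becomes the best precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the rectangle areas under the interpolated precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap

def mean_average_precision(per_class):
    """per_class: dict mapping class name -> (scored_hits, num_ground_truth)."""
    aps = [average_precision(hits, n) for hits, n in per_class.values()]
    return sum(aps) / len(aps)
```

Missed small objects lower recall and false alarms in cluttered backgrounds lower precision, so both failure modes reduce AP.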

The VisDrone2019 dataset, utilized for testing, contains over 6,000 images capturing various small object classes such as pedestrians, vehicles, and bicycles, reflecting the intricate nature of urban and rural landscapes. Notably, small objects account for over 60% of the depicted instances, amplifying the need for models capable of addressing these detection challenges effectively.
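What counts as "small" depends on the convention used. A minimal sketch, assuming the COCO convention that a small object has a bounding-box area below 32 × 32 pixels (the threshold behind the 60% figure is not stated here), shows how such a share could be measured from annotation box sizes. The function name and the sample boxes are hypothetical.

```python
SMALL_AREA_MAX = 32 * 32  # COCO's "small" cutoff in square pixels; an assumed convention here

def small_object_share(boxes, max_area=SMALL_AREA_MAX):
    """boxes: list of (width, height) in pixels. Returns the fraction with area below max_area."""
    if not boxes:
        return 0.0
    small = sum(1 for w, h in boxes if w * h < max_area)
    return small / len(boxes)

# Hypothetical box sizes, not taken from VisDrone2019.
example_boxes = [(10, 10), (20, 30), (40, 40), (100, 50), (31, 31)]
share = small_object_share(example_boxes)  # 3 of the 5 boxes fall under the cutoff
```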

The evolution of object detection algorithms has historically traversed two primary pathways: two-stage and one-stage methods. Two-stage approaches, exemplified by technologies such as Faster R-CNN and Mask R-CNN, typically generate candidate detection regions before classifying and refining these areas. While these methods boast heightened accuracy, they suffer from slower processing speeds. In contrast, one-stage systems, such as the YOLO (You Only Look Once) frameworks, streamline this process by combining classification and regression within a single step, thereby enabling faster inference times.

Nonetheless, small object detection in drone datasets remains difficult: small targets are often missed because they cover so few pixels and carry little visual information. Prior research has proposed enhancements such as graded loss functions to improve detection accuracy, yet challenges persist in high-density target environments.

Taken together, these findings make SMA-YOLO a significant step towards overcoming these difficulties, evidenced not only by its mAP improvement but also by its potential to make detection models for UAV use more robust and adaptable.

By improving the representation and detection of small objects, SMA-YOLO makes real-time detection more feasible across a range of complex scenarios. The implications extend beyond object recognition itself, opening avenues for improved safety in crowded environments and greater operational efficiency in diverse drone applications.

As ongoing research endeavors expand upon the success of the SMA-YOLO algorithm, overcoming limitations associated with scale, domain adaptation, and environmental variability remains paramount. These aims will propel future inquiries toward enhanced model robustness and applicability across differing atmospheric conditions, potentially heralding a new era in remote sensing efficiency.