Researchers have unveiled a new class of two-dimensional floating-gate memories designed to improve the efficiency of large-scale neural networks, which underpin applications such as autonomous driving and image recognition. The devices, termed gate-injection-mode (GIM) two-dimensional floating-gate memories, show performance that could shape the future of neuromorphic computing hardware.

The study, published on March 18, 2025, reports that the new memories can hold 8-bit states (256 distinguishable levels) that remain stable for more than 10,000 seconds, using a programming voltage of only 3 volts. According to the study, these metrics place the devices ahead of existing nonvolatile memory technologies and make them suitable for integration into high-performance neural network accelerators.

The work stems from the need for more efficient computing systems that can handle the large-scale parallel processing inherent in machine learning and AI. Conventional architectures are bottlenecked by the extensive data movement required between memory and processing units. This inefficiency has spurred interest in neuromorphic computing systems: architectures designed to imitate the way the human brain processes and stores data.

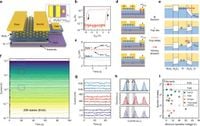

Earlier 2D floating-gate memories, while promising, suffered from limited endurance and stability. Using materials such as monolayer and few-layer MoS₂, platinum, and aluminum oxide, the research team built a device structure in which the charge control gate is decoupled from the floating gate and channel. This design improves the retention and stability of stored charge, yielding an endurance of more than 10⁵ cycles and a yield of 94.9% across 256 fabricated devices.

The GIM memory relies on a bi-pulse programming strategy that improves state stability by minimizing the impact of charge trapping in the dielectric layers. As the authors put it, "This work demonstrates the potential of GIM 2D FGs for high-performance neuromorphic computing accelerators." Using the devices, the researchers carried out experimental image convolutions on a 9 × 2 device array and found that the measured results closely matched simulation predictions.
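To make the array-based convolution concrete, the following is a minimal sketch of how a 3 × 3 kernel could be evaluated on a 9 × 2 array. It rests on assumptions the article does not confirm: that the nine rows hold the flattened kernel and that the two columns form a differential pair (one for positive weights, one for negative weights). The function names are hypothetical, and the "conductances" are idealized numbers rather than measured device values.

```python
# Illustrative sketch only: the differential-pair mapping is an assumption,
# not the authors' documented scheme.

def to_differential(kernel):
    """Flatten a 3x3 kernel into 9 (g_plus, g_minus) pairs of non-negative values."""
    flat = [w for row in kernel for w in row]
    return [(max(w, 0.0), max(-w, 0.0)) for w in flat]

def array_output(patch, array):
    """Multiply-accumulate one 3x3 image patch against the 9x2 array.

    Each pixel acts as an input voltage; each column sums its products
    (as column currents would), and the output is the column difference.
    """
    voltages = [v for row in patch for v in row]
    i_plus = sum(v * g[0] for v, g in zip(voltages, array))
    i_minus = sum(v * g[1] for v, g in zip(voltages, array))
    return i_plus - i_minus

def convolve(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).

    Like most CNN code, this is a cross-correlation: the kernel is not flipped.
    """
    array = to_differential(kernel)
    height, width = len(image), len(image[0])
    output = []
    for r in range(height - 2):
        row = []
        for c in range(width - 2):
            patch = [image[r + i][c:c + 3] for i in range(3)]
            row.append(array_output(patch, array))
        output.append(row)
    return output

# Usage: a vertical-edge kernel applied to a small image with one edge.
image = [[0, 0, 1, 1] for _ in range(4)]
kernel = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
result = convolve(image, kernel)
```

In this idealized picture, each output pixel needs only one read of the array, which is why storing the kernel in memory avoids moving weights back and forth to a separate processor.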

The team also evaluated the GIM memories by transferring 38,592 kernel parameters from a pre-trained convolutional neural network (CNN) onto them, obtaining inference accuracies close to the ideal values. The result underscores both the technology's applicability to AI and its potential to make edge-computing devices, which process and store data locally, more efficient.
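One way to picture the parameter transfer is as a quantization step: each trained weight is mapped to one of the 256 levels an 8-bit memory state can represent. The sketch below assumes simple uniform quantization over the weight range, which may differ from the mapping the researchers used; the function names and example weights are hypothetical.

```python
# Illustrative sketch only: uniform quantization is an assumption,
# not the mapping reported in the study.

LEVELS = 2 ** 8  # an 8-bit state can encode 256 distinct levels

def quantize(weights, levels=LEVELS):
    """Map each weight to an integer code in [0, levels - 1]."""
    lo, hi = min(weights), max(weights)
    if hi == lo:
        # All weights identical: a single level is enough.
        return [0] * len(weights), lo, 0.0
    step = (hi - lo) / (levels - 1)
    codes = [round((w - lo) / step) for w in weights]
    return codes, lo, step

def dequantize(codes, lo, step):
    """Recover approximate weights from the stored codes."""
    return [lo + c * step for c in codes]

# Usage: store a handful of made-up weights and measure the rounding error.
weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
codes, lo, step = quantize(weights)
restored = dequantize(codes, lo, step)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

With uniform levels, the rounding error per weight is at most half a step, which gives some intuition for why 256 well-separated states can keep inference accuracy near the ideal value.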

For neuromorphic computing, the appeal of the new memory lies in lower power consumption without a loss of accuracy, a combination that matters most in mobile devices and other applications where energy efficiency is paramount.

Looking ahead, gate-injection-mode floating-gate memories could advance the development of neural network accelerators and enable more sophisticated AI applications. The research provides a detailed account of the devices' fabrication and performance and opens avenues for further work on neuromorphic hardware.

In summary, GIM two-dimensional floating-gate memories represent a promising step toward more efficient neuromorphic computing hardware and more capable artificial intelligence systems.