Deep learning (DL) has the potential to transform medical imaging, but practical adoption is often hampered by limited data quality and diversity. Recently published research introduces MedMNIST+, a dataset collection designed to broaden the evaluation of medical image classifiers with larger benchmarks, multiple image resolutions, and varied imaging modalities.

Medical professionals are increasingly aware of what DL can offer, yet the transfer of this technology from the laboratory to clinical practice remains slow. A major cause of this bottleneck is that the medical datasets available for training DL algorithms are limited and heterogeneous. The new study presents findings aimed at addressing these issues and improving clinical applicability.

"Higher image resolutions do not consistently improve performance beyond a certain threshold," the authors note, reflecting how difficult it is to choose the right input resolution for DL. The study systematically reassesses widely used Convolutional Neural Network (CNN) and Vision Transformer (ViT) architectures across a range of medical datasets, establishing baselines that put earlier assumptions about these architectures to the test.

The MedMNIST+ benchmark collection extends MedMNIST v2, which provided medical images only at 28x28 pixels, by adding versions at 64x64, 128x128, and 224x224 pixels. The benchmark systematically analyzes how performance changes across these resolutions, identifying trends relevant to deep learning in medicine.
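To put the four resolutions in perspective, the short Python sketch below computes the pixel count of each square image size and its ratio to the original 28x28 images. It uses only the sizes named above; the function name `pixel_scaling` is illustrative and is not part of any MedMNIST tooling.

```python
# Square image sizes: 28 (MedMNIST v2) plus 64, 128, 224 (added in MedMNIST+).
RESOLUTIONS = [28, 64, 128, 224]


def pixel_scaling(sizes, base=28):
    """Return {side: (pixel_count, ratio_to_base)} for square images.

    Illustrative helper, not part of any MedMNIST package.
    """
    base_pixels = base * base
    table = {}
    for side in sizes:
        pixels = side * side  # a side x side image has side^2 pixels per channel
        table[side] = (pixels, pixels / base_pixels)
    return table


if __name__ == "__main__":
    for side, (pixels, ratio) in pixel_scaling(RESOLUTIONS).items():
        print(f"{side}x{side}: {pixels:>6} pixels, {ratio:5.1f}x the 28x28 size")
```

Because 224 = 8 x 28, a 224x224 image carries 64 times as many pixels per channel as a 28x28 one, which is why the choice of resolution weighs so heavily on storage and compute.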

The findings suggest that working at lower resolutions can reduce overall computational demands, particularly during the prototyping stages of model development. The study states, "Our analysis reaffirms the competitiveness of CNNs compared to ViTs, emphasizing the importance of comprehending the intrinsic capabilities of different architectures." Insights like these could help make DL models more adaptable and effective in clinical environments.

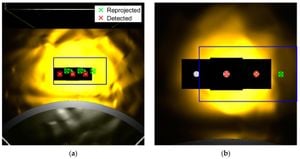

The researchers benchmarked several DL architectures from both the convolutional and transformer families across 12 medical datasets. The datasets span imaging modalities such as X-ray, optical coherence tomography (OCT), ultrasound, and computed tomography (CT), and cover binary and multi-class classification tasks, among others. This diversity is deliberate: it is meant to show how well current methods hold up under varied conditions.

One notable observation is that performance saturates when moving from 128x128 to 224x224 pixels: higher resolutions do yield improvements, but the returns diminish beyond 128x128. This suggests that lower-resolution images can be used during development to save computational resources and speed up experiments without a significant loss in accuracy.
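The compute savings from prototyping at 128x128 instead of 224x224 can be estimated with back-of-the-envelope arithmetic. The sketch below rests on two simplifying assumptions that are ours, not the study's: a CNN's per-image cost grows roughly linearly with pixel count at a fixed architecture, and a ViT applied directly at each resolution with 16x16 patches (the patch size of ViT-B/16, chosen here as an assumption) has self-attention cost that grows with the square of the token count. Real costs also depend on other layers, batch size, and hardware.

```python
PATCH = 16  # assumed ViT patch size (as in ViT-B/16); not taken from the study


def conv_cost_ratio(small, large):
    """Approximate CNN cost ratio, assuming cost scales with pixel count."""
    return (large * large) / (small * small)


def vit_attention_ratio(small, large, patch=PATCH):
    """Approximate self-attention cost ratio, assuming cost ~ tokens^2."""
    tokens_small = (small // patch) ** 2  # non-overlapping patches per image
    tokens_large = (large // patch) ** 2
    return tokens_large ** 2 / tokens_small ** 2


if __name__ == "__main__":
    print(f"CNN, 128 -> 224: ~{conv_cost_ratio(128, 224):.2f}x the compute")
    print(f"ViT attention, 128 -> 224: ~{vit_attention_ratio(128, 224):.2f}x")
```

Under these assumptions, moving from 128x128 (64 tokens) to 224x224 (196 tokens) roughly triples convolutional cost (about 3.06x) and raises attention cost by about 9.4x. Stopping at 128x128 while accuracy has already saturated can therefore save substantial compute.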

These findings arrive at a time when deep learning is growing rapidly, yet its generalizability across diverse populations remains uncertain. The MedMNIST+ enhancements aim to reduce biases and give researchers more balanced benchmarks, which is especially relevant for deployment across varied patient populations.

Importantly, the research advocates prioritizing computationally efficient methods over simply scaling existing technology to reach state-of-the-art benchmark results. Following the lessons of MedMNIST+, researchers and practitioners are encouraged to evaluate techniques on multiple benchmarks rather than concentrating resources on optimizing a single performance metric.

Finally, the authors urge the medical imaging community to approach the architecture debate objectively, recognizing the strengths of both convolutional and transformer models. Future work may focus on building larger datasets, incorporating suitable inductive biases, and strengthening collaboration between the healthcare domain and DL research.

Through its systematic evaluation on MedMNIST+, the study adds to existing research and offers valuable insights for deploying deep learning models in clinical practice. By promoting transparency and reproducibility, it makes it easier for researchers to compare results and accelerate innovation across medical imaging applications.