In the rapidly evolving field of object detection, researchers are looking for ways to raise detection accuracy while keeping costs manageable. In a recent paper published in Scientific Reports, Bei Wang, Bing He, Chao Li, Xiaowei Shen, and Xianyang Zhang propose a relation-based self-distillation framework aimed at improving performance without incurring additional computational cost.

The core problem in object detection is the trade-off between accuracy and computational efficiency. As visual sensors advance, analyzing the data they generate efficiently becomes essential. The proposed framework uses a self-distillation method that integrates relational knowledge: information about the similarities and differences among representations of objects of the same category under varied conditions.
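To make the idea of relational knowledge concrete, here is a minimal sketch. It is not the authors' formulation, which this article does not spell out. It assumes that a "relation" is the cosine similarity between feature vectors of same-category objects, and that self-distillation penalizes the gap between the relation matrices computed under two conditions. The function names `relation_matrix` and `relation_loss` are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def relation_matrix(features):
    """Pairwise similarities among instance features of one category.

    Assumption: cosine similarity stands in for whatever relation
    measure the paper actually uses.
    """
    return [[cosine(u, v) for v in features] for u in features]


def relation_loss(feats_a, feats_b):
    """Mean squared difference between two relation matrices.

    feats_a and feats_b hold features of the same instances under two
    conditions (for example, two augmented views). This is an
    illustrative choice of loss, not the published one.
    """
    ra = relation_matrix(feats_a)
    rb = relation_matrix(feats_b)
    n = len(ra)
    if n == 0:
        return 0.0
    total = sum((ra[i][j] - rb[i][j]) ** 2 for i in range(n) for j in range(n))
    return total / (n * n)


# Two instances of one category, seen under two conditions.
view_a = [[1.0, 0.0], [0.0, 1.0]]  # instances look dissimilar
view_b = [[1.0, 0.0], [1.0, 0.0]]  # instances look identical
loss = relation_loss(view_a, view_b)
```

Because the loss compares similarity structure rather than raw features, rescaling every feature vector leaves it unchanged. That is one reason a relation-based target can be less brittle than matching features directly.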

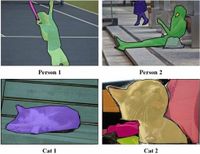

At the heart of the framework is the relation-based self-distillation method, which pushes the detector to learn how objects of the same category are represented in different contexts. This focus helps the detector refine its predictions and improve overall detection accuracy. The paper also introduces an adaptive filtering strategy, which ensures that only the most reliable detection results contribute to training by discarding lower-confidence predictions before they can degrade learning.
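The filtering step can be illustrated with a short sketch. The article does not say how the paper adapts its threshold, so the rule below is an assumption: keep detections whose confidence is at least the mean confidence of the current batch, and never go below a fixed floor. The name `adaptive_filter`, the `floor` parameter, and the dictionary layout are all hypothetical.

```python
from statistics import mean


def adaptive_filter(detections, floor=0.3):
    """Keep detections scoring at or above max(floor, batch mean score).

    Assumption: the threshold adapts to the batch through its mean
    confidence; the paper's actual rule may differ.
    """
    if not detections:
        return []
    threshold = max(floor, mean(d["score"] for d in detections))
    return [d for d in detections if d["score"] >= threshold]


# Example batch of detections with confidence scores.
batch = [
    {"label": "car", "score": 0.9},
    {"label": "car", "score": 0.8},
    {"label": "car", "score": 0.2},
    {"label": "car", "score": 0.1},
]
kept = adaptive_filter(batch)  # batch mean is 0.5, so two detections survive
```

A threshold tied to the batch rises as the detector grows more confident during training, while the floor prevents weak predictions from passing when every score in the batch is low.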

To validate their method, the team conducted extensive experiments using the MS COCO and PASCAL VOC datasets. The results indicated that their approach not only boosted detection accuracy across various scenarios but did so without imposing additional computational overhead on existing object detection systems.

Many current object detection techniques, both one-stage and two-stage, often need larger models to achieve substantial performance improvements. The proposed self-distillation framework instead improves existing models without first pre-training a large teacher model, a requirement that has posed challenges for organizations mindful of implementation costs.

Comparative analysis against established knowledge distillation methods showed that Wang et al.'s approach improves detection performance more consistently. The paper reports an improvement of approximately 1.5 points in mean Average Precision (mAP) for one-stage detectors, and larger gains (up to 2.3 points) for two-stage detectors such as Cascade R-CNN.

The experiments make the case for self-distillation methods that guide detectors toward relational knowledge. The findings indicate that modeling the relationships among object representations improves detection performance.

Moving forward, the researchers aim to explore further applications for their framework, particularly in the area of class-aware learning. Their work could support further advances in object detection, potentially benefiting industries that rely on precise visual analysis in real time.