Recent advances in electrochemical random-access memory (ECRAM) devices may pave the way for more efficient, brain-like artificial intelligence (AI) applications. Researchers have developed high-performance ECRAM devices designed specifically for analog computing, built on the properties of tungsten oxide.

These devices address the growing demand for faster and more energy-efficient computational methods in AI as this field continues to expand. ECRAM can potentially serve as a crucial component in analog cross-point arrays due to its programmability, stability, and multi-level switching capabilities.
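To make the role of a cross-point array concrete, here is a minimal sketch of the matrix-vector multiplication such an array performs in the analog domain. It assumes an idealized array with no wire resistance or device nonlinearity. The function name `crossbar_mvm` and the conductance and voltage values are illustrative and do not come from the study.

```python
def crossbar_mvm(conductances, voltages):
    """Return the column currents of an idealized cross-point array.

    conductances[i][j] is the device conductance (siemens) at row i, column j.
    voltages[i] is the voltage (volts) applied to row i.
    Each device contributes G * V (Ohm's law), and the contributions on a
    column add up (Kirchhoff's current law): I_j = sum_i G[i][j] * V[i].
    """
    n_rows = len(conductances)
    if len(voltages) != n_rows:
        raise ValueError("need one input voltage per row")
    n_cols = len(conductances[0])
    return [
        sum(conductances[i][j] * voltages[i] for i in range(n_rows))
        for j in range(n_cols)
    ]


# Illustrative values only: a 2x2 array with microsiemens-scale devices.
G = [[1e-6, 2e-6],
     [3e-6, 4e-6]]
V = [0.1, 0.2]
currents = crossbar_mvm(G, V)
print(currents)  # column currents in amperes
```

Each stored conductance acts as a weight, which is why stable multi-level switching matters: the more distinct conductance levels a device can hold, the finer the weights the array can represent.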

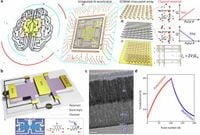

The study highlights the underlying mechanisms that govern ECRAM behavior, including temperature-dependent electron transport and the effect of ionic migration on conductivity. Using Hall measurements, the researchers extracted critical transport parameters and demonstrated how temperature influences ECRAM behavior.
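As a rough illustration of what a Hall measurement yields, the sketch below applies the textbook single-carrier Hall-bar relations to obtain sheet carrier density, sheet resistance, and Hall mobility. It is not the research team's analysis code. The function `hall_parameters`, its argument names, and the numbers in the example are assumptions made for illustration.

```python
Q = 1.602176634e-19  # elementary charge in coulombs


def hall_parameters(current_a, field_t, hall_voltage_v,
                    long_voltage_v, width_m, length_m):
    """Single-carrier Hall-bar estimates (SI units throughout).

    Returns (sheet_density [1/m^2], sheet_resistance [ohm/sq],
             mobility [m^2/(V s)]).
    """
    # Sheet carrier density from the Hall voltage: n_s = I * B / (q * |V_H|)
    sheet_density = current_a * field_t / (Q * abs(hall_voltage_v))
    # Sheet resistance from the longitudinal voltage drop and bar geometry
    sheet_resistance = (long_voltage_v / current_a) * (width_m / length_m)
    # Hall mobility: mu = 1 / (q * n_s * R_s)
    mobility = 1.0 / (Q * sheet_density * sheet_resistance)
    return sheet_density, sheet_resistance, mobility


# Illustrative numbers only, not measured data.
n_s, r_s, mu = hall_parameters(
    current_a=1e-6, field_t=1.0, hall_voltage_v=1e-3,
    long_voltage_v=1.0, width_m=10e-6, length_m=10e-6,
)
print(n_s, r_s, mu * 1e4)  # mobility converted to cm^2/(V s)
```

Because the Hall voltage scales with mobility, low-mobility films produce very small Hall signals, which is what makes such measurements difficult.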

Using a variable-temperature Hall measurement system, they established the oxygen donor level in tungsten oxide at around 0.1 eV. This donor level plays a crucial role in defining the device's performance characteristics, particularly its ability to increase carrier mobility and density at lower temperatures, leading to improved conductance.
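One common way to estimate a donor level from variable-temperature data is an Arrhenius fit of carrier density against inverse temperature. The sketch below assumes the simple form n = n0 * exp(-Ea / (kB * T)). The exact exponent (Ea versus Ea/2) depends on compensation in the material, and the article does not say which model the researchers used. The data here are synthetic, generated from an assumed 0.1 eV level, and the function name `activation_energy_ev` is hypothetical.

```python
import math

K_B_EV = 8.617333262e-5  # Boltzmann constant in eV/K


def activation_energy_ev(temps_k, densities):
    """Least-squares fit of ln(n) versus 1/T; returns Ea in eV.

    Assumes n = n0 * exp(-Ea / (kB * T)), so the slope of ln(n)
    against 1/T equals -Ea / kB.
    """
    xs = [1.0 / t for t in temps_k]
    ys = [math.log(n) for n in densities]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return -slope * K_B_EV


# Synthetic data only: densities generated from an assumed 0.1 eV level.
temps = [100.0, 150.0, 200.0, 250.0, 300.0]
dens = [1e20 * math.exp(-0.1 / (K_B_EV * t)) for t in temps]
ea = activation_energy_ev(temps, dens)
print(round(ea, 4))
```

With real measurements the points will scatter around the fitted line, so the fit should be restricted to the temperature range where the plot of ln(n) against 1/T is actually linear.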

Moreover, the conductance potentiation observed during these experiments was directly linked to reversible atomic structure changes, suggesting the potential for enhanced energy efficiency in AI hardware.

The multi-terminal Hall-bar configuration also allowed the research team to perform complex measurements that overcame previous barriers associated with low-mobility materials. Because the work focuses on optimizing ECRAM for practical AI applications, these findings represent a significant step toward capable and reliable neuromorphic computing systems.

The researchers envision that this exploration not only deepens theoretical understanding but also opens doors for future practical applications in AI, such as advanced neural network training that requires both high accuracy and low energy consumption.