In the rapidly evolving field of computer vision, removing reflections from images remains a significant challenge, especially for high-resolution images. Researchers have long struggled to remove reflections without degrading the background scene they obscure. A new study led by Songnan Chen and Zheng Feng, published on March 22, 2025, in Scientific Reports, introduces a method that could change how high-resolution images are processed.

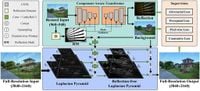

The study unveils the Laplacian pyramid-based component-aware transformer (LapCAT), a framework designed for reflection removal in high-resolution images. The authors explain that existing methods often struggle at high resolution because they downsample the input, which discards fine image details.
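To see why downsampling is lossy, consider a toy one-dimensional scanline made of a smooth ramp plus a fine alternating texture. The sketch below is an illustration, not code from the study: it halves the resolution by averaging neighboring pairs and then restores the original length by repeating samples. The helper names `downsample` and `upsample` are hypothetical.

```python
def downsample(x):
    """Halve the resolution by averaging neighboring pairs (even length assumed)."""
    return [(x[i] + x[i + 1]) / 2 for i in range(0, len(x), 2)]

def upsample(x):
    """Double the resolution by repeating each sample (nearest neighbor)."""
    return [v for v in x for _ in range(2)]

# Toy scanline: smooth ramp (coarse structure) + alternating +/-1 texture (fine detail).
ramp = [i / 8 for i in range(16)]
texture = [1 if i % 2 == 0 else -1 for i in range(16)]
signal = [r + t for r, t in zip(ramp, texture)]

# Round trip through half resolution and measure what did not survive.
restored = upsample(downsample(signal))
lost = [s - r for s, r in zip(signal, restored)]
print(max(abs(v) for v in lost))  # 0.9375: nearly the full texture amplitude
```

The smooth ramp survives almost intact, but the texture is nearly wiped out: every entry of `lost` has magnitude 0.9375. Real resampling filters differ in detail, but fine texture removed this way cannot be recovered by upsampling alone.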

“LapCAT leverages a Laplacian pyramid network to remove high-frequency reflection patterns and reconstruct the high-resolution background image guided by the clean low-frequency background components,” wrote the authors of the article. In other words, rather than processing the full-resolution image in a single pass, the model uses a clean, coarse version of the background as a guide when removing reflections from the fine-detail layers.

Reflections typically appear when a photo is taken through glass or another reflective surface: light reflected by the surface is superimposed on the scene behind it and partially obscures it. Current solutions based on generative models have made strides in removing reflections from standard-resolution images but falter on ultra-high-resolution formats such as 4K. Chen and Feng's LapCAT addresses this by first decomposing the input with a Laplacian pyramid, which splits a high-resolution image into a small low-frequency base image, carrying coarse structure and color, and a set of high-frequency residuals, carrying edges and fine texture. This decomposition lets the model treat the coarse and fine components of a reflection differently.
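The decomposition itself is simple to sketch. The minimal example below assumes a 1-D signal for brevity (an image applies the same steps along both axes) and a 2-tap box filter in place of the Gaussian blur usually used. It illustrates the general Laplacian pyramid technique, not LapCAT's implementation, and all function names are hypothetical.

```python
def downsample(x):
    # 2-tap box filter + decimation (a Gaussian blur is the more common choice).
    return [(x[i] + x[i + 1]) / 2 for i in range(0, len(x), 2)]

def upsample(x):
    # Nearest-neighbor expansion back to double length.
    return [v for v in x for _ in range(2)]

def build_laplacian_pyramid(x, levels):
    """Return [L0, ..., L(levels-1), base]: high-frequency residuals from
    finest to coarsest, followed by the low-frequency base."""
    if len(x) % (2 ** levels):
        raise ValueError("length must be divisible by 2**levels")
    bands, current = [], list(x)
    for _ in range(levels):
        low = downsample(current)
        # Residual = detail that the coarser level cannot represent.
        bands.append([c - u for c, u in zip(current, upsample(low))])
        current = low
    bands.append(current)  # low-frequency base
    return bands

def collapse_laplacian_pyramid(bands):
    """Invert build_laplacian_pyramid: upsample the base and add residuals back."""
    current = bands[-1]
    for residual in reversed(bands[:-1]):
        current = [u + r for u, r in zip(upsample(current), residual)]
    return current

signal = [3, 5, 4, 8, 10, 6, 2, 2]
bands = build_laplacian_pyramid(signal, levels=2)
print(bands[-1])                                    # [5.0, 5.0]
print(collapse_laplacian_pyramid(bands) == signal)  # True
```

Because each residual stores exactly what upsampling the coarser level misses, collapsing the pyramid reconstructs the input exactly. Following the authors' description, a network in this setting would clean the small base cheaply and then use it to guide reflection removal in the residuals before collapsing.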

The core of LapCAT is its component-separable transformer block (CSTB), which employs a reflection-aware multi-head self-attention mechanism. The attention is guided by a reflection mask generated through pixel-wise contrastive learning, helping the model distinguish reflection components from background content. Pairing self-attention, which relates regions across the image, with a per-pixel reflection mask combines global and local analysis, improving the model's ability to restore high-fidelity images.
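The article does not spell out how the mask enters the attention computation, so the following is only a hypothetical sketch of the two ideas. `mask_guided_attention` is single-head attention whose logits are penalized by `beta * |m_i - m_j|` when a query and a key disagree on an assumed reflection probability in [0, 1], nudging background pixels to attend to background and reflection pixels to reflection; a multi-head version would run several such heads on split channels. `pixel_contrastive_loss` is a supervised InfoNCE-style loss of the kind commonly used for pixel-wise contrastive learning. All names, the penalty form, `beta`, and `temperature` are assumptions.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def mask_guided_attention(queries, keys, values, mask, beta=4.0):
    """Hypothetical single-head attention: scaled dot-product logits minus a
    penalty when query i and key j have different reflection-mask values."""
    d = len(queries[0])
    out = []
    for i, q in enumerate(queries):
        logits = [dot(q, k) / math.sqrt(d) - beta * abs(mask[i] - mask[j])
                  for j, k in enumerate(keys)]
        weights = softmax(logits)
        out.append([sum(w * v[c] for w, v in zip(weights, values))
                    for c in range(len(values[0]))])
    return out

def pixel_contrastive_loss(embeddings, labels, temperature=0.1):
    """Supervised InfoNCE over (unit-norm) pixel embeddings: pixels sharing a
    label (1 = reflection, 0 = background) are pulled together, others apart."""
    n = len(embeddings)
    total, count = 0.0, 0
    for i in range(n):
        others = [j for j in range(n) if j != i]
        sims = [dot(embeddings[i], embeddings[j]) / temperature for j in others]
        # log-sum-exp over all other pixels: the InfoNCE denominator.
        m = max(sims)
        log_denominator = m + math.log(sum(math.exp(s - m) for s in sims))
        for j, s in zip(others, sims):
            if labels[j] == labels[i]:  # each same-label pixel is a positive
                total += log_denominator - s
                count += 1
    return total / count if count else 0.0

labels = [0, 0, 1, 1]  # 0 = background, 1 = reflection
separated = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
mixed = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
# Embeddings that cluster by label give a much lower loss.
print(pixel_contrastive_loss(separated, labels) < pixel_contrastive_loss(mixed, labels))  # True
```

In this toy setup the loss would train embeddings whose clustering yields the mask, and the mask then steers which pixels attend to which.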

The authors evaluated LapCAT on several reflection removal benchmarks, including Real20 and EIR^2. “Extensive experiments on several benchmark datasets for reflection removal demonstrate the superiority of our LapCAT, especially the excellent performance and high efficiency in removing reflection from high-resolution images than state-of-the-art methods,” stated the authors. These findings indicate that LapCAT not only removes reflections more effectively than competing methods but also does so more efficiently, pointing to potential applications in photography, video production, and augmented reality.
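The article does not report LapCAT's computational cost, but a back-of-envelope count suggests why working on a coarse pyramid level helps efficiency. Global self-attention compares every token with every other token, so its cost grows with the square of the token count. The cost model below (one token per pixel) and the function name are assumptions for illustration only.

```python
def attention_pairs(width, height, scale=1):
    """Query-key pairs for global self-attention with one token per pixel,
    after downsampling by `scale` along each side (hypothetical cost model)."""
    tokens = (width // scale) * (height // scale)
    return tokens * tokens

full = attention_pairs(3840, 2160)       # native 4K (UHD) frame
coarse = attention_pairs(3840, 2160, 4)  # two pyramid levels down: 960 x 540
print(f"{full:.2e} vs {coarse:.2e} pairs, ratio {full // coarse}")
```

Shrinking each side by 4 cuts the token count by 16 and the pair count by 256. Practical transformers use patches or windowed attention, so real counts are far lower, but the quadratic scaling is why cleaning a small base image is much cheaper than attending over full-resolution pixels.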

To summarize, LapCAT represents a notable step forward in image processing. By combining the Laplacian pyramid, a classical multi-scale image decomposition, with the component-separable transformer block, the researchers offer a more effective way to tackle reflection removal in high-resolution imagery. As imaging technology advances, frameworks like this could help improve visual fidelity and expand the capabilities of computational imaging.