In a significant shift towards utilizing user-generated content for artificial intelligence training, Apple has announced that beta testers of iOS must consent to the use of their content for training its Apple Intelligence models. This new program, which is set to roll out in the forthcoming iOS 18.5 beta version, has raised concerns among users regarding privacy and consent.

According to AppleInsider, users who participate in the beta testing program will have their content analyzed to improve Apple’s AI capabilities, and they reportedly cannot opt out of this use of their data. Apple claims that the training will occur entirely on the device and that it uses a technique called Differential Privacy, which adds statistical noise to what a device reports, to preserve user confidentiality. According to Apple, this means personal data is not transferred to its servers for training purposes.
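To make the idea of Differential Privacy more concrete, here is a minimal sketch of randomized response, a textbook local differential privacy mechanism. It is an illustration only and is not Apple's implementation: the function names, the `epsilon` value, and the simulated population are all assumptions. Each device reports a single yes/no answer that is deliberately flipped some of the time, so no individual report can be trusted, yet the aggregate rate can still be estimated.

```python
import math
import random
from typing import Sequence


def truth_probability(epsilon: float) -> float:
    """Probability of reporting the true bit for privacy budget epsilon."""
    return math.exp(epsilon) / (1.0 + math.exp(epsilon))


def randomized_response(truth: bool, epsilon: float, rng: random.Random) -> bool:
    """Report the true bit with probability e^eps / (1 + e^eps), else flip it."""
    return truth if rng.random() < truth_probability(epsilon) else not truth


def estimate_true_rate(reports: Sequence[bool], epsilon: float) -> float:
    """Recover an unbiased estimate of the true 'yes' rate from noisy reports.

    If pi is the true rate and p the truth probability, then
    observed = p * pi + (1 - p) * (1 - pi), so
    pi = (observed + p - 1) / (2p - 1).
    """
    p = truth_probability(epsilon)
    observed = sum(reports) / len(reports)
    return (observed + p - 1.0) / (2.0 * p - 1.0)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the demo is repeatable
    # Hypothetical population: 30% of 20,000 devices hold a 'yes'.
    truths = [i < 6000 for i in range(20000)]
    reports = [randomized_response(t, epsilon=1.0, rng=rng) for t in truths]
    print(f"estimated rate: {estimate_true_rate(reports, epsilon=1.0):.3f}")
```

A smaller `epsilon` flips more answers, which strengthens the privacy of each individual report at the cost of a noisier aggregate estimate.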

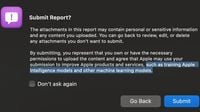

In a recent update, Apple stated, "Apple may use your report to improve products and services, for example to train Apple Intelligence models and other machine learning models." This notification appears when users upload files as part of a bug report, such as a sysdiagnose file. However, developers have criticized Apple for not providing an option to refuse this consent, suggesting that the only way to avoid participation is to refrain from submitting bug reports altogether.

As the Apple Intelligence learning program expands, it is expected to extend beyond bug reports to other areas of the iPhone operating system, including features like Genmoji, Image Playground, and Writing Tools. Unlike the bug-report notice, this broader, analytics-based program can be declined: users opt out by disabling analytics in their device settings, navigating to the 'Privacy and Security' section and turning off the 'Share iPhone and Watch Analytics' option.

Previously, Apple relied on synthetic data for AI training. However, the company is now shifting to user analytics, which it believes will provide more accurate training data. For instance, Apple may generate synthetic messages and compare them against actual user messages to refine its models. This method allows Apple to identify which synthetic data is most reflective of real-world usage, thereby enhancing the effectiveness of its AI systems.
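The comparison step described above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not Apple's method: a bag-of-words vector stands in for whatever learned embedding a real system would use, and the function names and sample messages are hypothetical. The point is that a device only needs to report which synthetic message was the closest match, not the content of the real message.

```python
import math
from collections import Counter
from typing import Sequence


def bag_of_words(text: str) -> Counter:
    """Toy stand-in for an embedding: lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def closest_synthetic(local_message: str, synthetic: Sequence[str]) -> int:
    """Return the index of the synthetic message most similar to a local one."""
    local_vec = bag_of_words(local_message)
    scores = [cosine(local_vec, bag_of_words(s)) for s in synthetic]
    return max(range(len(synthetic)), key=scores.__getitem__)


def tally_votes(local_messages: Sequence[str], synthetic: Sequence[str]) -> Counter:
    """Count how often each synthetic message was the best match."""
    return Counter(closest_synthetic(m, synthetic) for m in local_messages)


if __name__ == "__main__":
    synthetic = [
        "would you like to play tennis tomorrow at noon",
        "your package has shipped and will arrive friday",
        "reminder that the quarterly report is due monday",
    ]
    local = ["want to play tennis tomorrow at 11:30", "is the report due monday"]
    print(tally_votes(local, synthetic))
```

In a privacy-preserving deployment, the per-device index would itself be reported through a noisy mechanism rather than sent in the clear, so that the tally reveals only which synthetic messages are popular overall.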

Meanwhile, a troubling trend has emerged around OpenAI's o3 and o4-mini models. Social media users have raised alarms over these models' ability to determine geographical locations from photographs. After a recent announcement, enthusiasts began testing the models as geographic detectives. The models reason about an image step by step, cropping, rotating, and scaling it as they analyze it.

This technology, initially designed to assist in research and improve content accessibility, has morphed into a potential threat to privacy. Users on X have shared numerous examples in which o3 successfully identified cities, landmarks, and even individual establishments from blurry images, menus, or facades. Participants treat the exercise as a game reminiscent of GeoGuessr, in which players guess locations from Google Street View imagery.

Notably, these models generally do not rely on EXIF data, the metadata that can record where a photo was taken, nor on previous conversations. Their analyses work from the image content alone, which makes them independent but potentially hazardous. A recent TechCrunch experiment comparing o3 with the older GPT-4o produced mixed results. Both models performed similarly in identifying a frame from Yosemite National Park, but o3 outperformed GPT-4o in another test by correctly identifying a bar in Williamsburg, New York, from a photo of a violet headscarf, while GPT-4o mistakenly suggested a pub in Great Britain.

However, o3's accuracy is not without flaws. In several instances, it produced incorrect associations, linking a Parisian café to a neighborhood in Barcelona and misidentifying an American supermarket as being in Australia. Users have noted that the AI often makes guesses based on secondary details, such as vegetation or architectural styles, rather than concrete evidence.

The primary concern surrounding this technology is the absence of clear safeguards against misuse. An individual with malicious intent could upload a screenshot from someone else's Instagram story to ChatGPT, prompting the system to attempt to identify the location, even without geotags in the image. This raises significant risks of deanonymization.

In response to these concerns, OpenAI has stated that it has implemented security measures, training its models to reject requests aimed at identifying private individuals or analyzing confidential data. A company representative emphasized, "We actively monitor abuse and update usage policies." However, users have reported that these filters appear to function selectively. For instance, while the AI may readily assist in locating a restaurant based on its interior design, it refuses to identify faces in the same images.

While the new features of ChatGPT undoubtedly hold potential benefits in emergency situations or for local history research, the ease with which they can be employed for geographical searches necessitates a reevaluation of digital security standards. As the community shares tips on how to obscure metadata or distort images before sharing them, OpenAI has remained silent regarding its plans to enhance protective measures.
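For readers wondering what obscuring metadata involves in practice, here is a minimal sketch that removes APP1 segments, where EXIF and XMP metadata are normally stored, from a JPEG file's bytes. The function name is hypothetical, the parser handles only the common segment layout, and a dedicated tool is a safer choice for real use. As noted above, the models reportedly do not depend on EXIF data, so this removes embedded GPS tags but does nothing to stop location inference from what the picture shows.

```python
import struct

SOI = b"\xff\xd8"   # start-of-image marker
APP1 = 0xE1         # segment type that normally carries EXIF / XMP
SOS = 0xDA          # start-of-scan: compressed image data follows


def strip_app1_segments(jpeg: bytes) -> bytes:
    """Return a copy of the JPEG bytes with every APP1 segment removed."""
    if jpeg[:2] != SOI:
        raise ValueError("not a JPEG: missing SOI marker")
    out = bytearray(SOI)
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:
            raise ValueError(f"expected a marker at byte offset {pos}")
        marker = jpeg[pos + 1]
        if marker == SOS:
            # Everything from here on is image data; copy it untouched.
            out += jpeg[pos:]
            return bytes(out)
        # The 2-byte big-endian length counts itself but not the marker.
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        if length < 2:
            raise ValueError(f"invalid segment length at byte offset {pos}")
        end = pos + 2 + length
        if marker != APP1:
            out += jpeg[pos:end]  # keep every non-APP1 segment as-is
        pos = end
    out += jpeg[pos:]  # trailing bytes, if the file ended before SOS
    return bytes(out)


if __name__ == "__main__":
    # Tiny hand-built byte string with JPEG-like structure (not a viewable image).
    app0 = b"\xff\xe0" + struct.pack(">H", 7) + b"JFIF\x00"
    app1 = b"\xff\xe1" + struct.pack(">H", 12) + b"Exif\x00\x00GPS!"
    scan = b"\xff\xda\x00\x02\x12\x34\xff\xd9"
    cleaned = strip_app1_segments(SOI + app0 + app1 + scan)
    print(b"Exif" in cleaned)
```

Re-encoding or screenshotting an image has a similar effect on metadata, but none of these steps hides landmarks, signage, or architecture that remain visible in the frame.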

As both Apple and OpenAI continue to navigate the complexities of AI development and user privacy, the implications of these technologies on personal data and security remain a pressing concern for users and developers alike.