AI-generated Starter Packs made with ChatGPT are trending on social networks, but experts warn of serious risks to personal data.

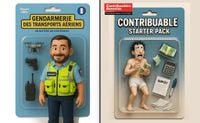

You’ve likely seen the recent wave of virtual figurines packaged with personalized accessories, known as Starter Packs, flooding your social media feeds. These creations, generated by artificial intelligence from your photos, have taken the internet by storm. However, beneath this playful exterior lies a troubling reality: the potential commercialization of your personal data.

Surfshark’s cybersecurity experts are raising the alarm about the data collection practices behind these seemingly innocent Starter Packs. According to Maud Lepetit, Surfshark’s France manager, “These technologies collect and store personal information without users being fully aware, seduced by the playful aspect of the trend.”

To create a personalized Starter Pack, users provide photos and personal descriptions, which feed the algorithms. This information does not simply vanish; it is used to train these algorithms, making them more efficient. In other words, users' facial features, interests, and personal characteristics become the raw material that enhances these systems, often without those users knowing the exact purposes of this data collection.

Even more concerning is the potential for this data to be exploited commercially. Lepetit explains, “This data can serve commercial purposes, such as reselling data or creating targeted advertising profiles.” Even with a robust privacy policy in place, data breaches can still occur. Your fun Starter Pack today could become tomorrow’s advertising fuel or, worse, be exposed after a data breach.

So, how can users enjoy the trend of Starter Packs without compromising their security? First and foremost, it’s essential to limit the sensitive personal information you share. A smiling face is usually enough; there’s no need to include your full name or compromising details about your life.

Another crucial but often overlooked step is to read the terms of service of the platform you’re using. Although it may seem tedious, understanding the privacy policy and data usage terms can save you significant headaches later. “It’s important to consult the privacy policy and terms of service of any platform to understand how data is processed,” insists Lepetit.

Furthermore, avoid connecting to unsecured networks, such as public Wi-Fi, when using these services. “If you must do so, tools like VPNs can help,” the expert advises, though she naturally advocates for Surfshark’s VPN services. Alternatively, if you prefer not to pay for a VPN, you can use your phone’s mobile data connection instead.

Lastly, prioritize privacy-respecting alternatives. Not all Starter Pack generators are created equal when it comes to data protection. Look for those that clearly commit to not commercially exploiting your information and limit the retention period of your data. As Lepetit emphasizes, “The security of digital data should never be taken lightly.”

In a related vein, Apple faces its own challenges in balancing user privacy with the need to improve its AI technology. While most AI providers enhance their products by training them with public information and user data, Apple has taken a different route. The tech giant has long touted its commitment to user privacy, and to uphold this, it relies on synthetic data to train and improve its AI products.

Synthetic data, created using Apple’s large language model (LLM), aims to mimic the essence of real data. For example, the AI can generate a synthetic email that resembles a real one in subject and style, assisting it in learning how to summarize actual emails—a function already integrated into Apple Mail.

To further protect user privacy, Apple pairs synthetic data with an approach known as differential privacy, which lets it learn from real user data in aggregate without exposing any individual's content. As Apple explains in a recent blog post, the process starts by creating a large number of synthetic emails on various subjects. Each synthetic message is then converted into an embedding, a numerical representation that captures key elements like language, subject, and length.

These embeddings are then sent to the devices of Apple users who have opted in to share analytics. Each device selects a small sample of real user emails and computes embeddings for them. The device then identifies the synthetic records that best match the language, subject, and other characteristics of the user’s emails.
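The matching step described above can be pictured as a nearest-neighbor search over embeddings. The following is only a minimal sketch under stated assumptions: the article does not say which similarity measure Apple uses, so cosine similarity is assumed here, and the function names and the tiny three-dimensional vectors are hypothetical illustrations rather than anything from Apple's software.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def closest_synthetic_index(local_embedding, synthetic_embeddings):
    """Return the index of the synthetic embedding most similar to a local one."""
    return max(
        range(len(synthetic_embeddings)),
        key=lambda i: cosine_similarity(local_embedding, synthetic_embeddings[i]),
    )


def device_votes(local_embeddings, synthetic_embeddings):
    """For each sampled local email embedding, pick the closest synthetic record.

    Only these indices would ever be candidates for reporting; the local
    embeddings themselves stay on the device in this sketch.
    """
    return [closest_synthetic_index(e, synthetic_embeddings) for e in local_embeddings]


# Hypothetical toy data: three synthetic records and two sampled local emails.
synthetic = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
local = [[0.9, 0.1, 0.0], [0.1, 0.2, 0.95]]
votes = device_votes(local, synthetic)
```

The design point is that the output is a list of indices into the synthetic set, not the emails or their embeddings, which is what makes it possible to keep real content on the device.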

Thanks to differential privacy, Apple can identify the most similar synthetic records without compromising user identity. For instance, if an email about tennis is selected, the AI might generate a similar message by replacing “tennis” with “football,” allowing it to learn how to create better summaries for a wider variety of messages.

So, how does Apple ensure user privacy during this process? The sharing of analytics data is disabled by default, meaning only those who wish to participate in data sharing are involved. Users can easily review, accept, or decline sharing options on any Apple device by navigating to Settings, selecting Privacy & Security, and scrolling down to the Analytics & Improvements setting.

Furthermore, the sampled user email data never leaves the device and is never shared with Apple. Instead, the device sends a signal to Apple indicating which synthetic emails are closest to the user’s real emails, without referencing any identifiable information like an IP address or Apple account.
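The article does not describe the exact mechanism that makes this signal differentially private. One common textbook technique for this kind of "which option did you pick" report is k-ary randomized response, where each device sometimes reports a random option instead of its true choice, and the server corrects for that noise in aggregate. The sketch below assumes that technique purely for illustration; the function names, the `epsilon` value, and the vote counts are hypothetical and are not taken from Apple.

```python
import math
import random


def randomized_response(true_index, k, epsilon, rng):
    """Report the true index with probability e^eps / (e^eps + k - 1),
    otherwise report one of the other k - 1 indices uniformly at random."""
    p_truth = math.exp(epsilon) / (math.exp(epsilon) + k - 1)
    if rng.random() < p_truth:
        return true_index
    other = rng.randrange(k - 1)
    # Skip over the true index so it is never chosen in the "lie" branch.
    return other if other < true_index else other + 1


def estimate_counts(reports, k, epsilon):
    """Debias the observed counts to estimate how many devices truly chose each index.

    Assumes epsilon > 0, otherwise reports carry no information.
    """
    n = len(reports)
    p = math.exp(epsilon) / (math.exp(epsilon) + k - 1)
    q = 1.0 / (math.exp(epsilon) + k - 1)
    observed = [0] * k
    for r in reports:
        observed[r] += 1
    return [(c - n * q) / (p - q) for c in observed]


# Hypothetical population: 10,000 devices voting among 3 synthetic records.
rng = random.Random(0)
true_votes = [0] * 6000 + [1] * 3000 + [2] * 1000
reports = [randomized_response(v, 3, 1.0, rng) for v in true_votes]
estimates = estimate_counts(reports, 3, 1.0)
```

No single report can be trusted, since any device may have answered at random, yet the estimated totals still reveal which synthetic records are most popular overall. A smaller `epsilon` means more noise and stronger privacy, at the cost of less accurate estimates.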

Apple has previously used differential privacy for its Genmoji feature, which uses AI to create personalized emojis based on user descriptions. This technique allows the company to identify popular prompts and patterns without linking them to specific users.

Looking ahead, Apple plans to extend the use of differential privacy to other AI features, including Image Playground, Image Wand, Memories Creation, Writing Tools, and Visual Intelligence. “By leveraging our many years of experience with techniques like differential privacy, along with new methods like synthetic data generation, we can enhance Apple Intelligence features while protecting the privacy of users who opt into our device analytics program,” Apple stated in its blog post.

In summary, the rise of AI-generated Starter Packs brings both excitement and caution. While they offer a fun way to engage with technology, users must remain vigilant about their personal data. Similarly, as companies like Apple navigate the complexities of AI development, the commitment to user privacy will be crucial in shaping the future of technology.