As mobile applications continue to evolve, augmented reality (AR) is rapidly gaining traction across various sectors, from gaming to healthcare. However, these applications face significant challenges, particularly regarding energy consumption and latency on portable devices. Recent developments aim to tackle these challenges with an innovative, AI-driven edge-assisted computing framework designed to optimize performance while conserving battery life.

The growing demand for mobile AR solutions comes with a hefty price: resource-intensive processes such as real-time image processing, object detection, and 3D rendering place a considerable strain on mobile devices. Conventional methods such as local processing can drain batteries quickly, while cloud offloading introduces high latency and security vulnerabilities. To address these issues, researchers have proposed a new framework that utilizes Reinforcement Learning and Adaptive Quality Scaling, promising a more efficient approach to task offloading and energy management.

Mobile AR applications traditionally rely on two primary methods for processing tasks: local processing and cloud offloading. However, local computation rapidly depletes a device’s energy reserves, particularly when handling intensive tasks. Conversely, while cloud processing benefits from greater computational resources, it is hindered by delays inherent in data transmission across networks.

To improve the performance of mobile AR applications, the researchers introduce an AI-assisted framework for intelligent task management. The framework uses Reinforcement Learning (RL), specifically Deep Q-Networks, to learn optimal task offloading policies. Drawing on real-time factors such as network status and battery life, it decides dynamically whether each task should be processed locally or offloaded to an edge server. This adaptation conserves energy and improves the user experience by reducing latency.
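
To make the offloading decision more concrete, here is a minimal sketch of the idea. It is not the authors' implementation: it swaps the Deep Q-Network for plain tabular Q-learning so that it runs with the standard library alone, and the state bins, the cost numbers, and every function name (`discretize`, `cost`, `train`, `decide`) are hypothetical choices made for illustration.

```python
import random
from itertools import product

ACTIONS = ("local", "edge")
STATES = list(product((0, 1), (0, 1)))  # (battery_bin, network_bin)


def discretize(battery: float, bandwidth_mbps: float) -> tuple:
    """Bin raw readings into a coarse state; the thresholds are assumptions."""
    return (0 if battery < 0.3 else 1, 0 if bandwidth_mbps < 10.0 else 1)


def cost(state: tuple, action: str) -> float:
    """Toy energy-plus-latency cost with made-up numbers."""
    battery_bin, network_bin = state
    if action == "local":
        energy = 2.0 if battery_bin == 0 else 1.0   # local work hurts more on low battery
        latency = 0.4
    else:
        energy = 0.3                                # mostly radio cost when offloading
        latency = 0.2 if network_bin == 1 else 1.5  # a poor link makes offloading slow
    return energy + latency


def train(steps: int = 20000, alpha: float = 0.05, gamma: float = 0.5,
          epsilon: float = 0.2, seed: int = 0) -> dict:
    """Learn Q(state, action) with epsilon-greedy tabular Q-learning."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    state = rng.choice(STATES)
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)                        # explore
        else:
            action = max(ACTIONS, key=lambda a: q[(state, a)])  # exploit
        reward = -cost(state, action)
        next_state = rng.choice(STATES)  # conditions drift at random in this toy world
        best_next = max(q[(next_state, a)] for a in ACTIONS)
        q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
        state = next_state
    return q


def decide(q: dict, battery: float, bandwidth_mbps: float) -> str:
    """Pick the action with the highest learned value for the current readings."""
    state = discretize(battery, bandwidth_mbps)
    return max(ACTIONS, key=lambda a: q[(state, a)])
```

Calling `q = train()` and then `decide(q, battery=0.8, bandwidth_mbps=2.0)` returns either `"local"` or `"edge"`. Under these toy costs the learned policy should keep work on the device only when the battery is healthy and the link is poor, and offload otherwise. A Deep Q-Network replaces the lookup table with a neural network so that the same update rule can handle continuous, higher-dimensional state.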

"Our method proves to be efficient in improving AR task performance, enhancing battery endurance on the devices, and improving real-time user experience," wrote the authors of the article. The framework also integrates adaptive quality scaling, which adjusts AR rendering quality to the available resources so that users do not encounter significant quality drops even in low-energy situations.
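
One way adaptive quality scaling could work is sketched below. This is an illustrative assumption rather than the paper's algorithm: the three quality levels, the battery thresholds, and the names `QualityLevel`, `LEVELS`, and `next_level` are all hypothetical. The default latency budget reuses the 80 ms figure reported in the experiments. Quality moves one level at a time, which is one simple way to avoid abrupt visual drops.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QualityLevel:
    name: str
    resolution_scale: float  # fraction of native render resolution
    target_fps: int


# Hypothetical presets, ordered from cheapest to most expensive.
LEVELS = (
    QualityLevel("low", 0.5, 30),
    QualityLevel("medium", 0.75, 45),
    QualityLevel("high", 1.0, 60),
)


def next_level(current: int, battery: float, latency_ms: float,
               latency_budget_ms: float = 80.0) -> int:
    """Return the index of the quality level to use for the next frames.

    Steps down when the battery is low or latency exceeds the budget,
    steps up when there is clear headroom, and otherwise holds steady.
    """
    if battery < 0.2 or latency_ms > latency_budget_ms:
        return max(current - 1, 0)
    if battery > 0.5 and latency_ms < 0.6 * latency_budget_ms:
        return min(current + 1, len(LEVELS) - 1)
    return current
```

For example, `LEVELS[next_level(2, battery=0.1, latency_ms=40.0)]` selects the medium preset, because the low battery forces a single step down from high. The gap between the step-down and step-up conditions acts as hysteresis, so readings near a threshold do not make the quality flicker.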

Experiments conducted with this framework yielded a 30% reduction in energy consumption compared to traditional methods, alongside a task success rate exceeding 90% and latency held below the threshold of 80 ms. These results point toward more sustainable mobile AR technologies that balance high performance with resource efficiency.

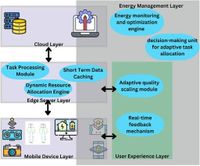

The system architecture consists of multiple layers, each designed to optimize energy management and boost user experience. Key components include the mobile device layer, responsible for basic AR tasks, and the edge server layer, dedicated to handling more intensive computational demands by relieving mobile devices of heavy processing loads. An energy management layer monitors energy usage across these components, striking a balance between effective performance and battery longevity.

Moreover, the proposed framework is not merely responsive; it is predictive. The integration of machine learning enables continuous optimization based on user behavior patterns, further enhancing resource allocation and ensuring users enjoy a premium experience without draining their devices. As mobile AR applications grow in complexity, this dynamic approach addresses the urgent need for methodologies that can swiftly adapt to ever-changing conditions.

Looking ahead, the researchers expect their AI-based system to scale to real-world settings such as smart cities and education. They emphasize the importance of future research to refine the framework with advanced techniques such as distributed learning, which could broaden its applicability across diverse operational environments and potentially address privacy and security issues in data handling.

"The proposed framework learns policies online, thus providing efficient strategies in energy consumption and latency," wrote the authors of the article. Their findings not only bolster the case for leveraging AI in edge computing but also pave the way toward the next generation of intelligent mobile applications capable of meeting diverse user needs without faltering in performance.