OpenAI’s rollout of native image generation in GPT-4o has brought a new wave of creative capabilities to ChatGPT users. Within a week, more than 700 million images had been generated, with Studio Ghibli-style art among the most popular trends. Beneath this artistic experimentation, however, lies a far more dangerous use case: creating fake Aadhaar cards with ChatGPT’s image generator.

When OpenAI added photorealistic image generation to GPT-4o, the move was celebrated for its creative potential. Users could create visual stories, concept art, mockups, character designs, and much more, all without leaving the ChatGPT interface. As with any powerful technology, however, misuse was always a risk. Some users are now using ChatGPT’s image generator to replicate government-issued identification documents, particularly India’s Aadhaar card, a key digital identity proof linked to everything from financial services to voter IDs.

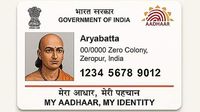

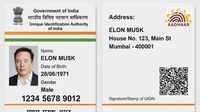

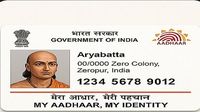

To gauge how serious the issue could become, several users and journalists tried recreating Aadhaar-style images with ChatGPT’s image tool. The results were visually convincing, mimicking the layout, fonts, QR code style, and overall design of the official card. Although the AI did not reproduce real Aadhaar numbers or valid QR code data, the visual similarity was enough to raise red flags. Facial details were, in most cases, inconsistent or stylized. That limitation may not deter bad actors, however, who could combine these templates with real personal data and make forgeries harder to detect.

The Aadhaar system is one of the world’s largest biometric ID programs. With over a billion enrollees, it plays a central role in India’s financial, governmental, and social welfare systems. Any breach or manipulation of an Aadhaar identity can lead to financial fraud, fake identity creation, illegal SIM card registration, and unauthorized access to government benefits. Using tools like ChatGPT to generate fake Aadhaar visuals is therefore not a matter of technological curiosity; it has serious national security and privacy implications.

OpenAI, along with other leading AI companies, has frequently come under scrutiny for releasing potentially dangerous features. This case reopens the question: should companies release powerful tools without robust misuse detection in place? OpenAI applies content filters, usage monitoring, and watermarking, but current safeguards appear insufficient to prevent misuse at scale. Easy access, photorealistic output, and weak gatekeeping leave such tools open to abuse.

This is not the first time AI-generated content has raised alarms. In the past year alone, deepfake videos of politicians have gone viral during elections in multiple countries, AI-generated fake news photos have muddied public perception of global conflicts, and scammers have used AI voice cloning for extortion and fraud. The fabrication of government IDs with consumer-facing AI tools marks a dangerous new chapter.

India, with its massive digital footprint and growing mobile internet base, is especially vulnerable. Aadhaar is more than an identity card; it is routinely used for KYC checks, banking access, and legal authentication. Cybersecurity experts are urging the Indian government to work closely with AI developers to monitor emerging misuse cases and to push for mandatory watermarking of generated images, systems that detect AI-generated documents, legal penalties for using AI tools to replicate ID proofs, and public awareness campaigns on spotting fake documents.

OpenAI has not officially commented on Aadhaar misuse specifically, but the company has previously stated that its image tools were designed with restrictions around sensitive or deceptive content. Real-world tests suggest those restrictions are not yet effective. This points to an urgent need for tighter API controls, document-specific filters, safety measures adapted to regional contexts, and partnerships with governments and law enforcement.

The introduction of image generation in ChatGPT is a milestone in AI accessibility, but it is also a reminder of the technology’s darker potential. The ability to create art, concepts, and learning visuals is revolutionary, yet misuse cases like fake Aadhaar cards cannot be ignored. As India and other nations integrate digital identity systems into daily life, guarding them against manipulation becomes a national priority. And as AI continues to evolve, tech companies, regulators, and users must collaborate to ensure innovation is not weaponized.